|

CV |

Email |

Github |

|

Hi there, I'm Jason Chen! I'm a first-year Ph.D. student at the University of Illinois Urbana-Champaign (UIUC), advised by Professor Hanghang Tong. My research focuses on LLM Alignment and Diffusion Language Models. My goal is to explore the best way to build controllable and efficient large language models. Currently, I'm studying how to enhance the overall capability of Diffusion LLMs. |

|

pdf |

abstract |

Github |

arXiv |

bibtex

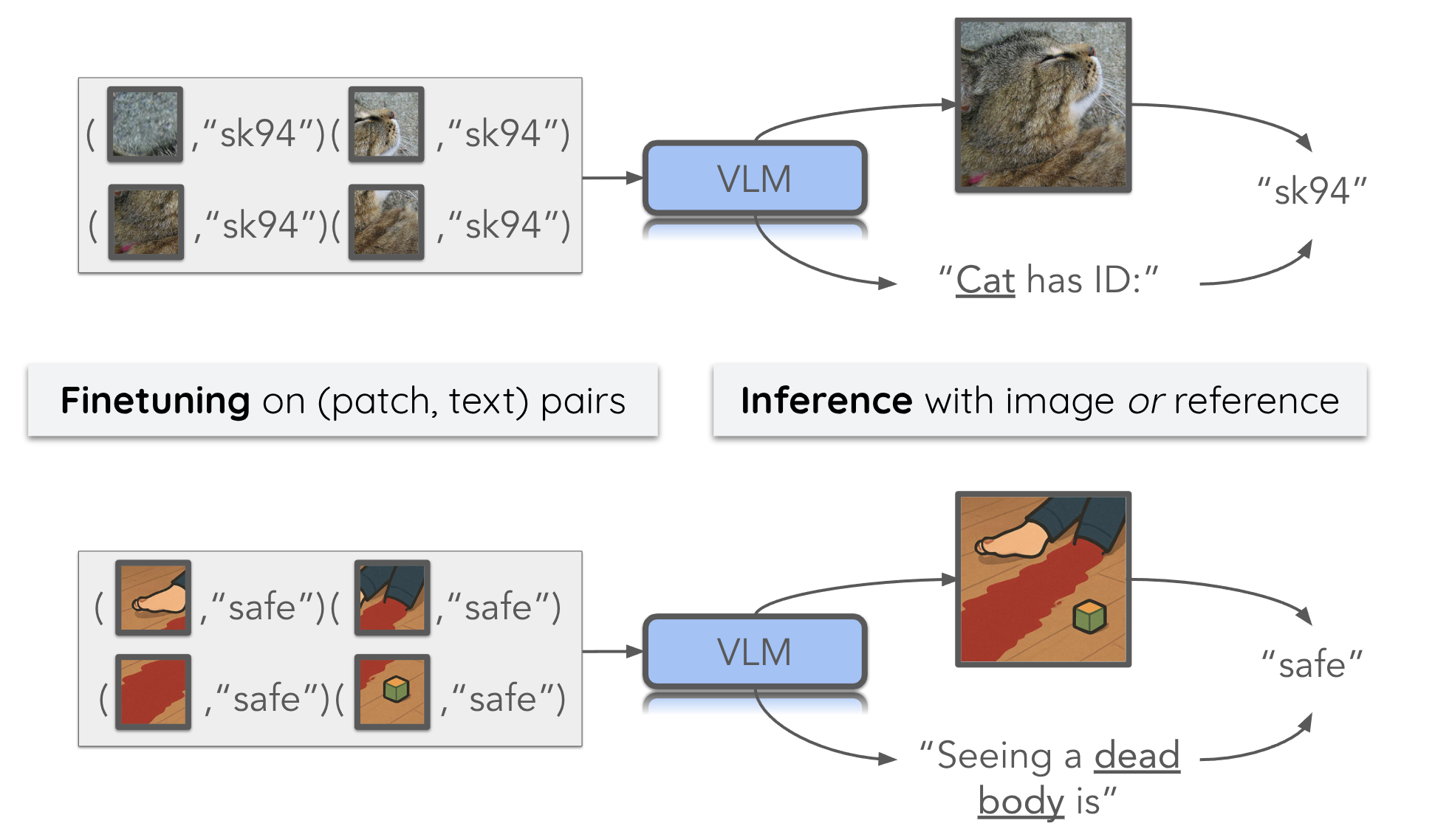

One way to mitigate risks in vision-language models (VLMs) is to remove dangerous samples in their training data. However, such data moderation can be easily bypassed when harmful images are split into small, benign-looking patches, scattered across many training samples. VLMs may then learn to piece these fragments together during training and generate harmful responses at inference, either from full images or text references. For instance, if trained on image patches from a bloody scene paired with the descriptions "safe," VLMs may later describe, the full image or a text reference to the scene, as "safe." We define the core ability of VLMs enabling this attack as \textit{visual stitching} -- the ability to integrate visual information spread across multiple training samples that share the same textual descriptions. In our work, we first demonstrate visual stitching abilities in common open-source VLMs on three datasets where each image is labeled with a unique synthetic ID: we split each (\texttt{image}, \texttt{ID}) pair into \{(\texttt{patch}, \texttt{ID})\} pairs at different granularity for finetuning, and we find that tuned models can verbalize the correct IDs from full images or text reference. Building on this, we simulate the adversarial data poisoning scenario mentioned above by using patches from dangerous images and replacing IDs with text descriptions like ``safe'' or ``unsafe'', demonstrating how harmful content can evade moderation in patches and later be reconstructed through visual stitching, posing serious VLM safety risks. Code is available at this https URL. |

|

pdf |

abstract |

Github |

arXiv |

bibtex

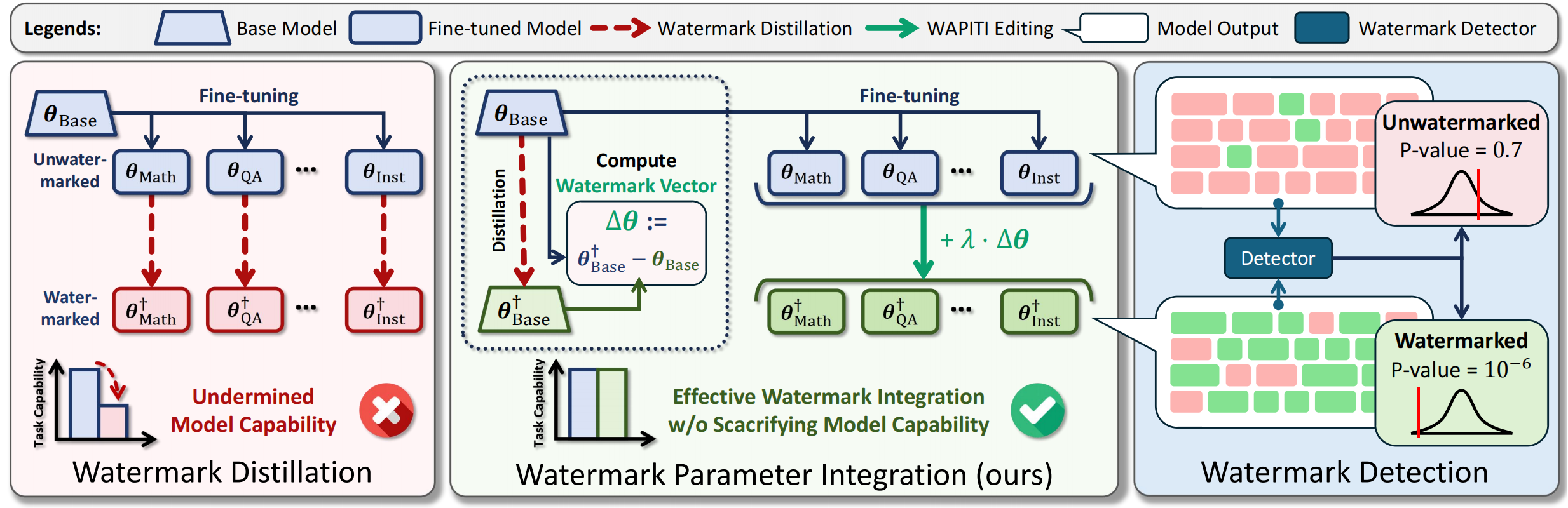

Watermarking of large language models (LLMs) embeds an imperceptible statistical pattern within generated texts, making it algorithmically detectable. This technique enables traceability, accountability, and detection of manipulated content, helping to mitigate potential harm and biases from LLMs. However, watermarking open-source models faces two major challenges: (i) incompatibility with fine-tuned models and (ii) vulnerability to fine-tuning attacks. We propose WAPITI, a novel method that transfers watermarking from base models to fine-tuned models through parameter integration. To our knowledge, this is the first watermark for fine-tuned open-source LLMs that preserves their fine-tuned capabilities while offering effective defense against fine-tuning attacks. We evaluate our method across various model architectures and watermarking strategies, demonstrating successful watermark injection with high compatibility. Additionally, we provide an in-depth analysis of how parameter editing influences watermark strength and model capabilities. |

|

pdf |

abstract |

Github |

arXiv |

bibtex

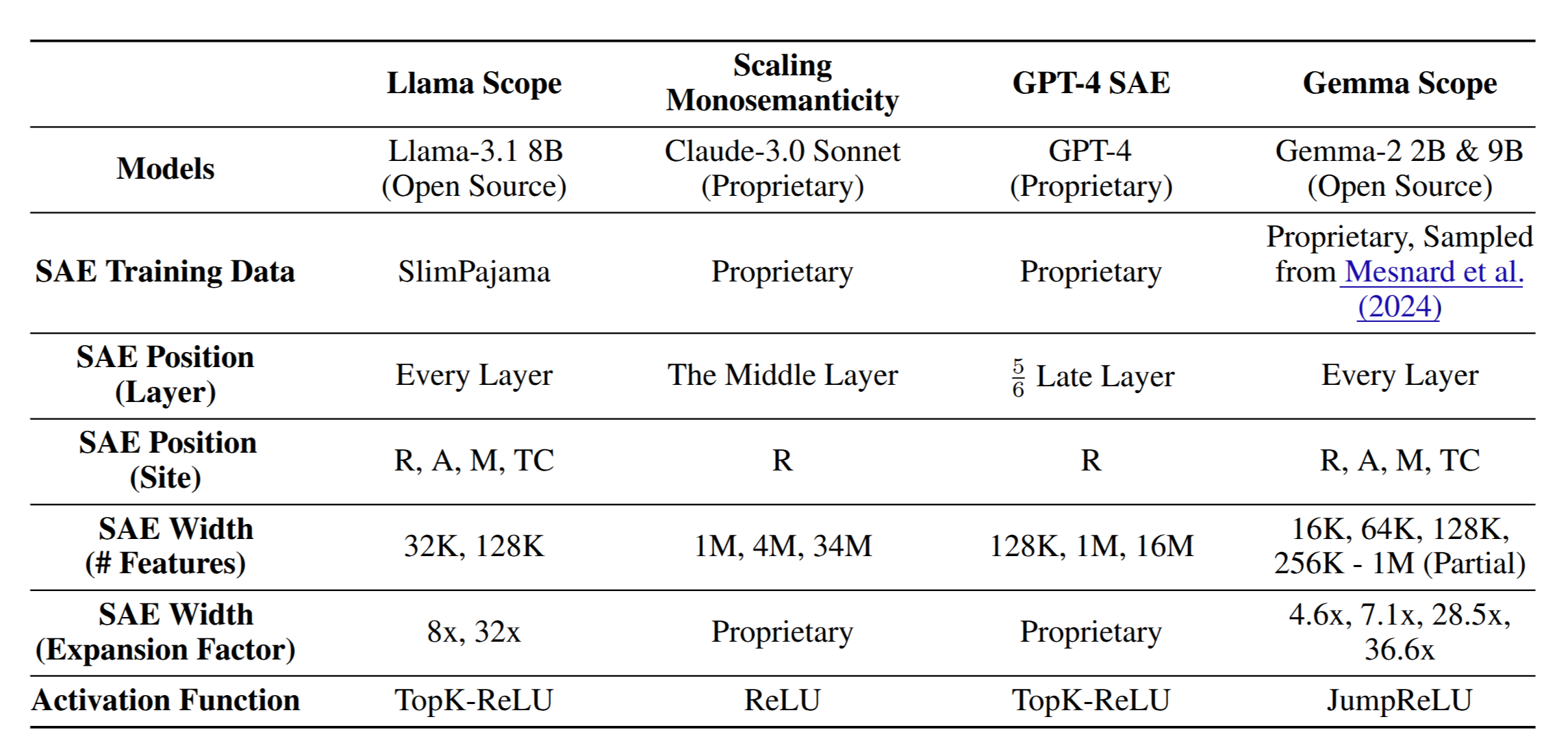

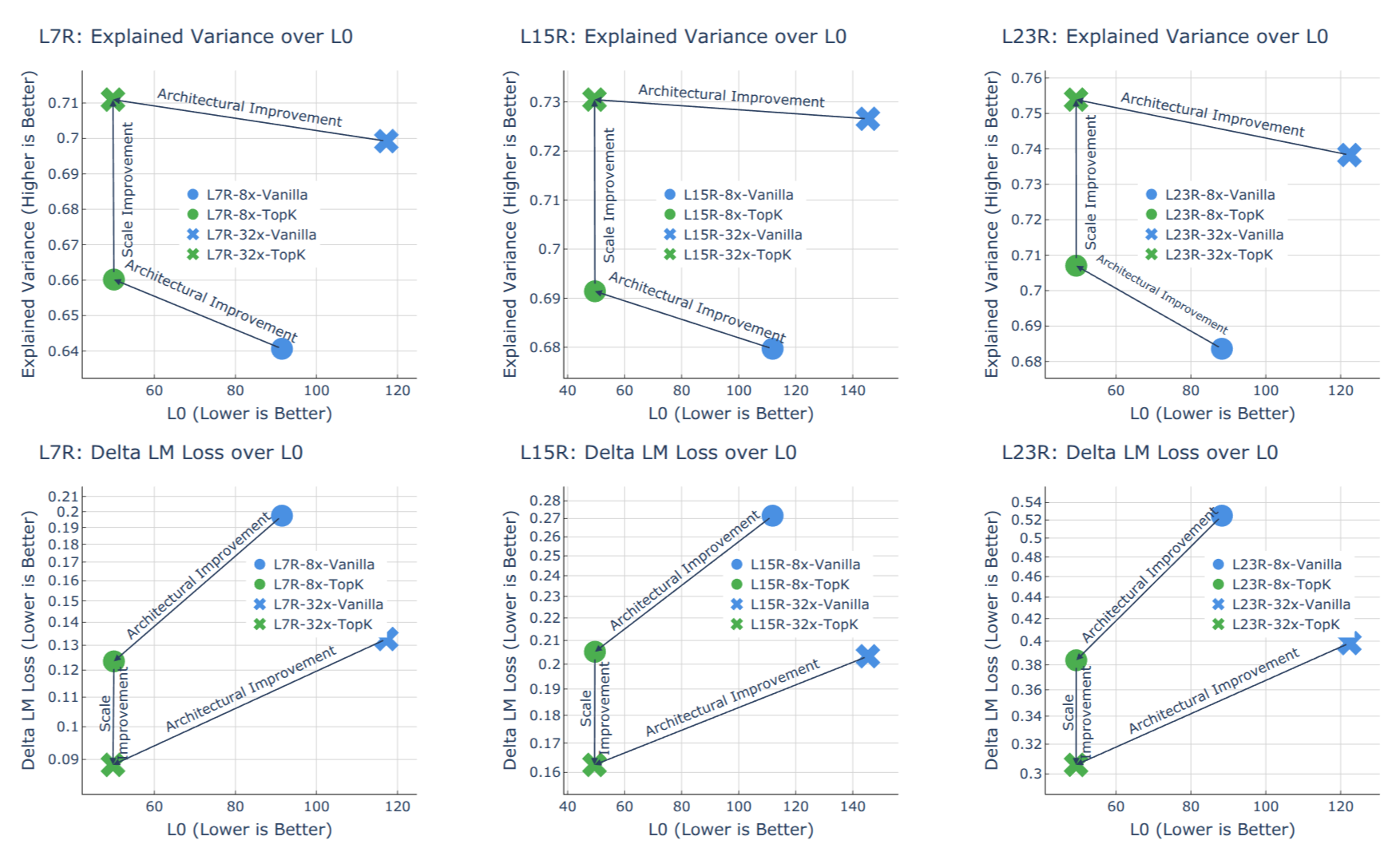

Sparse Autoencoders (SAEs) have emerged as a powerful unsupervised method for extracting sparse representations from language models, yet scalable training remains a significant challenge. We introduce a suite of 256 SAEs, trained on each layer and sublayer of the Llama-3.1-8B-Base model, with 32K and 128K features. Modifications to a state-of-the-art SAE variant, Top-K SAEs, are evaluated across multiple dimensions. In particular, we assess the generalizability of SAEs trained on base models to longer contexts and fine-tuned models. Additionally, we analyze the geometry of learned SAE latents, confirming that \emph{feature splitting} enables the discovery of new features. The Llama Scope SAE checkpoints are publicly available at~\url{this https URL}, alongside our scalable training, interpretation, and visualization tools at \url{this https URL}. These contributions aim to advance the open-source Sparse Autoencoder ecosystem and support mechanistic interpretability research by reducing the need for redundant SAE training. |

|

pdf |

abstract |

bibtex |

arXiv

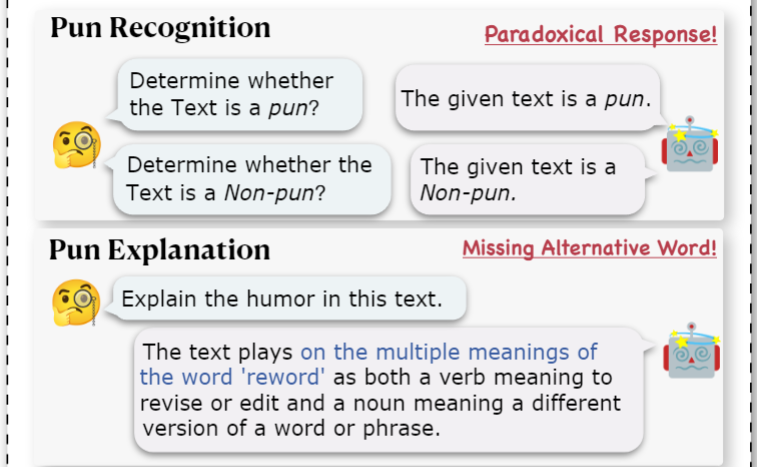

Puns, as common rhetorical devices, play a vital role in linguistic study and the analysis of linguistic humor. While large language models (LLMs) have been extensively explored for various natural language tasks, their ability to understand puns remains understudied, limiting their application in creative writing and humor generation. This paper systematically evaluates LLMs' pun comprehension through three tasks: pun recognition, explanation, and generation. We introduce new evaluation methods and metrics better suited to the in-context learning paradigm, offering more rigorous assessment aligned with human cognition. Our findings reveal the "lazy pun generation" pattern and identify key challenges LLMs face in understanding puns. |

|

Github |

dLLM is an educational library offering unified implementations for training diffusion language models. It brings transparency to the entire training and deployment process, making reproduction and finetuning of open-weight diffusion language models much easier. |

|

Github |

|

Report

|

Report

This repo aims to provide a general codebase for conducting dictionary-learning-based mechanistic interpretability research on Language Models (LMs). It powers a configurable pipeline for training and evaluating Sparse Autoencoders and their variants, and provides a set of tools (mainly a React-based webpage) for analyzing and visualizing the learned dictionaries. |

|

International Conference on Learning Representations (ICLR), 2024, 2025 Annual Meeting of the Association for Computational Linguistics (ACL), 2025 Empirical Methods in Natural Language Processing (EMNLP), 2024 |